Generative Gear Design

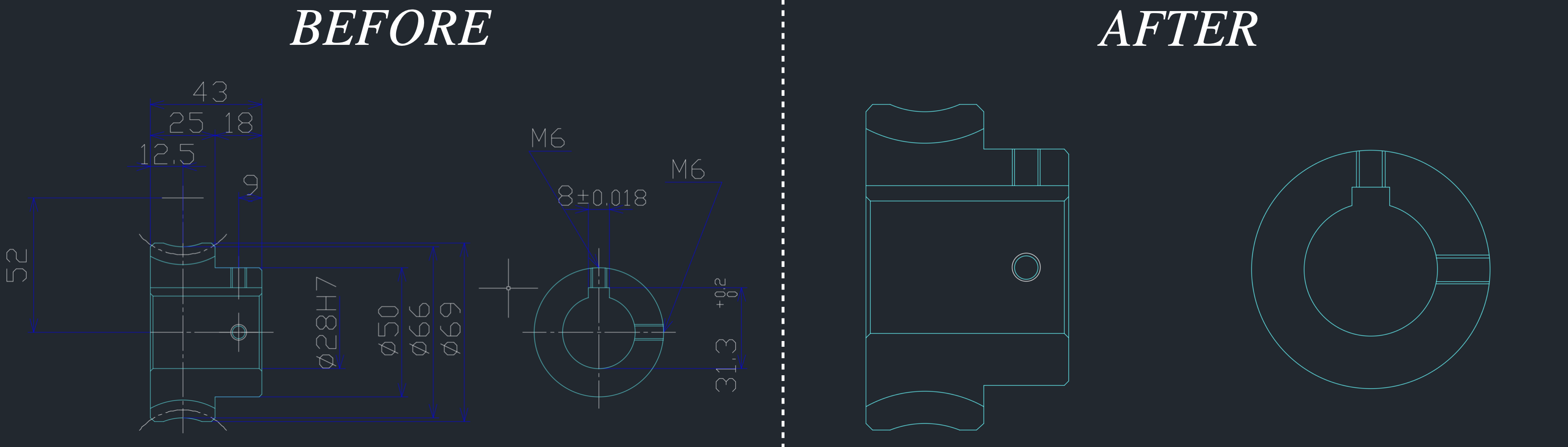

This is an ongoing personal project. The goal is to fine-tune an open-source large language model to automatically generate the DXF file of a gear from its specifications.

After scraping 11,000 data samples from KHK Gears, I am now in the fine-tuning stage.

Dataset

Prompted: Structured input-output data designed to train a language model.

Unstructured: Dictionary-DXF pairs, where each dictionary quantifies the specifications of one gear.
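As a rough illustration of how an unstructured dictionary-DXF pair might be turned into a prompted training example, the sketch below serializes the specification dictionary into an instruction prompt and uses the DXF text as the target completion. The field names (`module`, `num_teeth`, `face_width_mm`), the prompt wording, and the `to_prompted_example` helper are hypothetical; they are not taken from KHK's catalog or from this project's actual format.

```python
import json

def to_prompted_example(spec: dict, dxf_text: str) -> dict:
    """Turn one (specification, DXF) pair into a prompt/completion record.

    The template wording is a hypothetical choice; any consistent
    template would serve the same purpose.
    """
    # Sort keys so that identical specs always yield identical prompts.
    spec_lines = "\n".join(f"{key}: {spec[key]}" for key in sorted(spec))
    prompt = (
        "Generate the DXF file for a gear with these specifications:\n"
        f"{spec_lines}\n"
        "DXF:\n"
    )
    return {"prompt": prompt, "completion": dxf_text}

# Hypothetical spec fields and a minimal DXF body, for illustration only.
spec = {"module": 1.0, "num_teeth": 20, "face_width_mm": 10}
dxf = "0\nSECTION\n2\nENTITIES\n0\nENDSEC\n0\nEOF\n"
record = to_prompted_example(spec, dxf)
jsonl_line = json.dumps(record)  # one line of a JSONL training file
```

Writing one such record per line (JSONL) is a common input format for fine-tuning tooling, but the exact schema depends on the trainer used.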

Each gear in the KHK collection comes with quantitative specifications of its geometry (information that nearly uniquely determines the gear), along with a downloadable DXF file of that gear. I scraped all of this data using the BeautifulSoup and Selenium libraries.
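The project used BeautifulSoup and Selenium; the dependency-free sketch below swaps in Python's standard-library `html.parser` to show the core step of turning a two-column specification table into a dictionary. The HTML layout (a `<table>` whose rows each hold a `<th>` label and a `<td>` value) and the `SpecTableParser` class are assumptions for illustration, not KHK's actual markup or the project's scraper.

```python
from html.parser import HTMLParser

class SpecTableParser(HTMLParser):
    """Collect <th>label</th><td>value</td> rows into a dictionary.

    Assumes a hypothetical two-column spec table, not KHK's real markup.
    """

    def __init__(self):
        super().__init__()
        self.specs = {}
        self._cell = None   # "th" or "td" while inside a cell, else None
        self._label = None  # most recent header text awaiting its value
        self._buf = []      # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag in ("th", "td"):
            self._cell = tag
            self._buf = []

    def handle_data(self, data):
        # Ignore whitespace and text that sits outside table cells.
        if self._cell is not None:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag != self._cell:
            return
        text = "".join(self._buf).strip()
        if tag == "th":
            self._label = text
        elif self._label is not None:
            # A <td> that follows a <th> completes one spec entry.
            self.specs[self._label] = text
            self._label = None
        self._cell = None

html = """
<table>
  <tr><th>Module</th><td>1.0</td></tr>
  <tr><th>Number of teeth</th><td>20</td></tr>
</table>
"""
parser = SpecTableParser()
parser.feed(html)
print(parser.specs)  # {'Module': '1.0', 'Number of teeth': '20'}
```

In a Selenium-driven scrape, the string fed to the parser would typically be `driver.page_source` once the page has finished rendering.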

One massive (literally) issue: the DXF files are so long that they exceed the context window of the open-source models that I (and my hardware) can run, let alone train.

Upshot: Most of the information in the DXF files is common across gears and/or could be easily reconstructed algorithmically after generating the unique gear-specific details.

Solution: I automated the pruning of every DXF file in my dataset, retaining only the essential, gear-specific information.
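A minimal sketch of this kind of pruning, assuming the gear-specific geometry lives in the `ENTITIES` section and that the other sections (header variables, tables, blocks, objects) are the boilerplate that could be regenerated from a template. It relies only on the ASCII DXF layout of alternating group-code and value lines, with sections delimited by `0/SECTION`, `2/<name>`, and `0/ENDSEC`. The `keep_sections` helper and the choice to keep only `ENTITIES` are assumptions; the project's actual pruning rules are not described here.

```python
def keep_sections(dxf_text: str, keep=("ENTITIES",)) -> str:
    """Return a DXF string containing only the named sections plus EOF.

    Hypothetical pruning rule: everything outside `keep` is assumed to be
    reconstructible boilerplate.
    """
    lines = dxf_text.splitlines()
    # ASCII DXF stores data as (group code, value) line pairs.
    pairs = [(lines[i].strip(), lines[i + 1].strip())
             for i in range(0, len(lines) - 1, 2)]
    out = []
    i = 0
    while i < len(pairs):
        code, value = pairs[i]
        if code == "0" and value == "SECTION" and i + 1 < len(pairs):
            name = pairs[i + 1][1]  # the (2, name) pair right after SECTION
            # Scan forward to this section's ENDSEC marker.
            j = i
            while j < len(pairs) and pairs[j] != ("0", "ENDSEC"):
                j += 1
            if name in keep:
                out.extend(pairs[i:j + 1])
            i = j + 1
        else:
            i += 1
    out.append(("0", "EOF"))
    return "\n".join(f"{c}\n{v}" for c, v in out) + "\n"

sample = (
    "0\nSECTION\n2\nHEADER\n9\n$ACADVER\n1\nAC1015\n0\nENDSEC\n"
    "0\nSECTION\n2\nENTITIES\n"
    "0\nLINE\n8\n0\n10\n0.0\n20\n0.0\n11\n1.0\n21\n0.0\n"
    "0\nENDSEC\n0\nEOF\n"
)
pruned = keep_sections(sample)
```

Working on code/value pairs rather than raw lines avoids misreading a value such as `0` (here, a layer name) as a structural marker.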

Each DXF file in the original dataset contained, on average, 2,665 lines. Pruning reduced this to an average of 965 lines, a reduction of roughly 64%.
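The reduction figure follows directly from the two averages quoted above; the short helper below (a hypothetical name, used only for illustration) makes the arithmetic explicit.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which a quantity shrank, e.g. average lines per file."""
    return 100.0 * (1.0 - after / before)

avg_before, avg_after = 2665, 965  # average lines per DXF file, from the text
reduction = percent_reduction(avg_before, avg_after)
print(f"{reduction:.1f}%")  # 63.8%
```

Note that this is the reduction in the *average* length; the mean of the per-file reductions could differ slightly.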

Model Training

I have tried two training approaches so far:

Fine-tuning: Training an existing language model to produce DXF code.

Training from Scratch: Exploiting a truncated vocabulary and structured outputs to train a small autoregressive transformer.
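For the fine-tuning approach, one common detail is to compute the loss only on the DXF completion rather than on the prompt. The sketch below builds `input_ids` and `labels` for a causal language model, setting prompt positions to `-100`, the default `ignore_index` of PyTorch's `CrossEntropyLoss` (Hugging Face causal LM models use the same value and shift labels internally, so labels stay aligned with inputs). The toy whitespace tokenizer and the `build_example` helper are hypothetical stand-ins; whether this project masks prompts is not stated.

```python
IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss

def build_example(prompt_ids, completion_ids, eos_id, max_len):
    """Concatenate prompt and completion; mask the prompt out of the loss."""
    input_ids = list(prompt_ids) + list(completion_ids) + [eos_id]
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(completion_ids) + [eos_id]
    # Truncate both sequences identically so they stay aligned.
    return input_ids[:max_len], labels[:max_len]

# Toy tokenizer (hypothetical): each whitespace-separated token gets an id.
vocab = {}

def toy_encode(text):
    return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]

prompt = toy_encode("module: 1.0 num_teeth: 20 DXF:")
completion = toy_encode("0 SECTION 2 ENTITIES 0 ENDSEC")
ids, labels = build_example(prompt, completion, eos_id=999, max_len=64)
```

With a real model, the same masking is applied to the ids produced by that model's own tokenizer, and `max_len` would be its context length.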
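For training from scratch, a truncated vocabulary can be very small because DXF text is highly regular. The sketch below assumes one possible scheme (not necessarily the one used here): numeric values are spelled out character by character, so only digits, `.`, and `-` are needed for numbers, while DXF keywords such as `SECTION` or `LINE` become single tokens and line breaks become an explicit `<nl>` token. The special tokens and helper names are hypothetical.

```python
import re

# Hypothetical scheme: numbers are split into characters, keywords kept whole.
NUMBER = re.compile(r"^-?\d+(\.\d+)?$")
SPECIAL = ["<pad>", "<bos>", "<eos>", "<nl>"]

def tokenize(dxf_text):
    tokens = []
    for line in dxf_text.splitlines():
        value = line.strip()
        if NUMBER.match(value):
            tokens.extend(value)  # "-1.5" -> "-", "1", ".", "5"
        else:
            tokens.append(value)  # keyword such as "SECTION" or "LINE"
        tokens.append("<nl>")     # keep the line structure explicit
    return tokens

def build_vocab(corpus):
    symbols = sorted({t for text in corpus for t in tokenize(text)} - set(SPECIAL))
    itos = SPECIAL + symbols
    return {tok: i for i, tok in enumerate(itos)}, itos

def encode(text, stoi):
    return [stoi["<bos>"]] + [stoi[t] for t in tokenize(text)] + [stoi["<eos>"]]

def decode(ids, itos):
    out = []
    for i in ids:
        tok = itos[i]
        if tok in ("<pad>", "<bos>", "<eos>"):
            continue
        out.append("\n" if tok == "<nl>" else tok)
    return "".join(out)

sample = "0\nSECTION\n2\nENTITIES\n0\nLINE\n10\n-1.5\n0\nENDSEC\n0\nEOF\n"
stoi, itos = build_vocab([sample])
ids = encode(sample, stoi)
```

The trade-off of spelling out numbers is longer sequences in exchange for a closed vocabulary that never meets an unseen coordinate value.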

I am continuing to explore methods for training a generative model that can generalize beyond the gear catalog; sequence-to-sequence (Seq2Seq) models are one feasible option.

Check back soon for more progress!